Assessment was never meant to slow learning down, yet that’s often what it does. Traditional grading delays feedback, sometimes by days, sometimes longer, and that gap quietly erodes student learning and retention. By the time feedback arrives, the moment has passed. The thinking has cooled. Whatever lesson was there now feels distant.

Feedback quality, meanwhile, remains one of the strongest predictors of student performance and achievement. The problem is not intent. It’s capacity.

Large classes and growing administrative tasks leave little room for timely, detailed feedback, even when educators know exactly what students need. Periodic testing adds another constraint. Snapshot assessments offer only partial insight into student growth, missing patterns that unfold over time.

Educational institutions are under pressure to scale assessment without sacrificing rigor. That tension explains why AI in education entered the conversation at all. AI tools emerged to address speed, scale, and consistency gaps in assessment workflows.

To see why that matters, it helps to look next at what actually changes when feedback stops arriving late and starts arriving on time.

What Changes When Feedback Shifts From Delayed to Immediate?

The shift feels subtle at first. Then it compounds. Immediate feedback improves learning outcomes because it keeps the learning process active.

When AI provides instant feedback, the long waits associated with traditional grading disappear. Students see what worked, what didn’t, and why, while the task is still fresh.

Real-time feedback plays a crucial role here. It helps keep misconceptions from taking root by correcting errors before they repeat. Instead of practicing mistakes, students adjust in the moment.

Over time, that changes how learning unfolds. Feedback becomes continuous rather than episodic, supporting steady student growth instead of stop-and-start progress.

There’s also a motivational effect that’s easy to overlook. Timely feedback supports student engagement because effort and response stay closely linked.

Research generally indicates that when feedback arrives quickly, student satisfaction and persistence improve. You stay with the task longer. You’re more willing to revise, reflect, and try again.

This change in timing sets the foundation for everything else AI enables in assessment. Once feedback moves into real time, the next question becomes how AI systems actually generate it, and what they are doing behind the scenes to make it possible.

How Do AI Assessment Systems Actually Analyze Student Work?

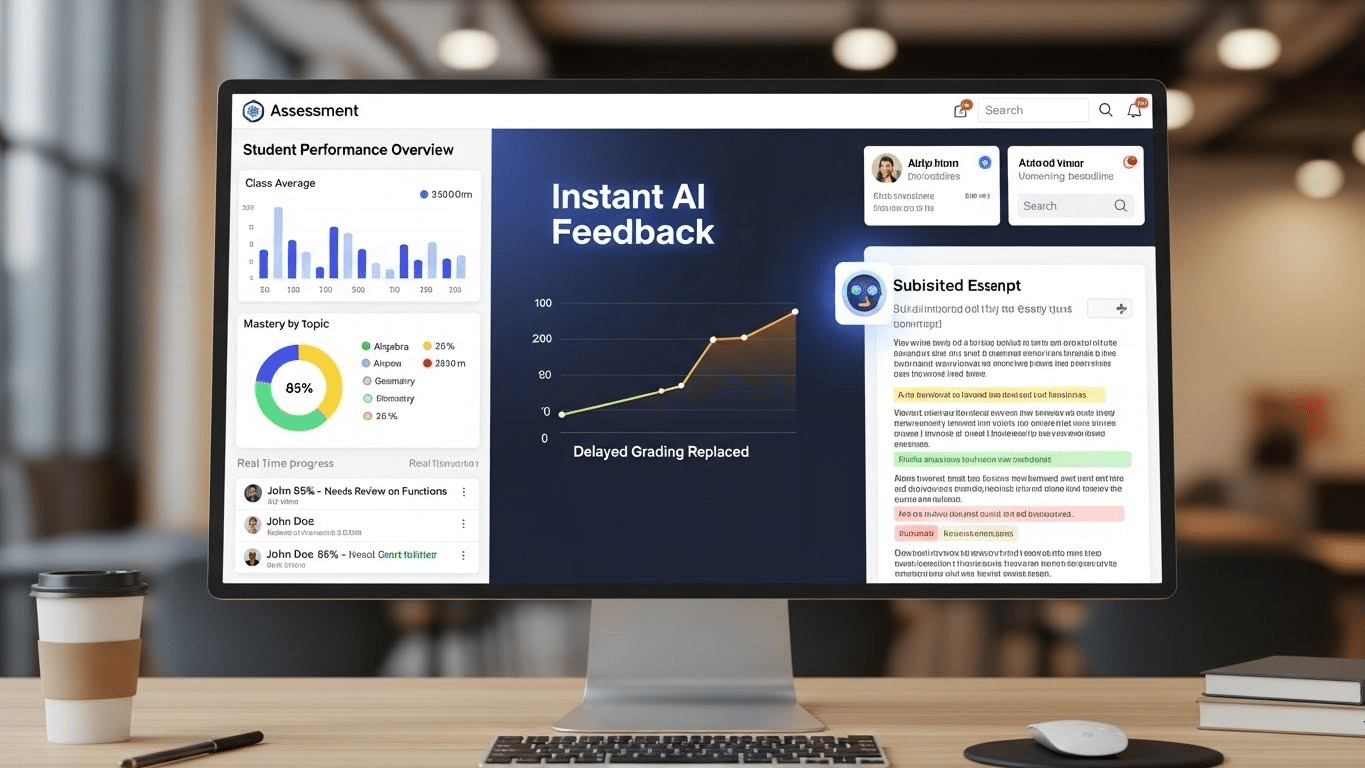

It starts faster than you might expect. The moment student work is submitted, AI assessment systems begin analyzing it in real time. Not later. Not overnight. Right then, while the thinking is still warm.

Artificial intelligence relies on two core capabilities here. Natural Language Processing (NLP) looks closely at written work, evaluating grammar, syntax, coherence, argument strength, and even how evidence is used. It is not just counting errors; it is examining structure and meaning.

Machine learning adds another layer by detecting learning patterns across large datasets. Over time, these models learn what strong work looks like, where students tend to struggle, and which feedback leads to improvement.

Consistency matters. AI applies assessment criteria uniformly, reducing the effects of individual grader bias and of the fatigue that builds up during long grading sessions. Automated grading tools can assess assignments instantly and at scale, something traditional grading simply cannot match.

Behind the scenes, this typically includes:

- NLP-driven written corrective feedback that supports revision and clarity

- Machine learning analysis for trend detection and predictive insights

- Real-time dashboards that surface actionable insights for educators
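To make "applying criteria uniformly" concrete, here is a minimal sketch of a rubric applied the same way to every submission. The `Criterion` class, the `RUBRIC` entries, and `score_submission` are hypothetical illustrations, and the keyword checks are crude stand-ins for the trained NLP models a real system would use. The point is only that every submission passes through the same checks, in the same order, with the same feedback attached to each failed check.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Criterion:
    """One rubric line: a name, a point value, a pass/fail check, and feedback shown if it fails."""
    name: str
    points: int
    check: Callable[[str], bool]
    feedback: str


# Hypothetical rubric. The lambdas are crude text heuristics standing in for real NLP models.
RUBRIC = [
    Criterion("length", 2, lambda t: len(t.split()) >= 50,
              "Develop the argument further; aim for at least 50 words."),
    Criterion("evidence", 3,
              lambda t: "for example" in t.lower() or "according to" in t.lower(),
              "Support the main claim with evidence (e.g. 'For example, ...')."),
    Criterion("conclusion", 1, lambda t: t.rstrip().endswith("."),
              "Finish with a complete concluding sentence."),
]


def score_submission(text: str, rubric: List[Criterion] = RUBRIC) -> Tuple[int, List[str]]:
    """Apply every criterion in the same order and the same way to one submission."""
    score = 0
    feedback = []
    for criterion in rubric:
        if criterion.check(text):
            score += criterion.points
        else:
            feedback.append(f"[{criterion.name}] {criterion.feedback}")
    return score, feedback


essay = "Homework helps learning. For example, spaced practice builds recall."
score, notes = score_submission(essay)
print(score, notes)  # 4, plus one [length] note asking for a longer answer
```

Because the rubric is data rather than a grader's memory, the thousandth essay is checked exactly like the first.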

Once analysis becomes this immediate and structured, feedback quality begins to change in noticeable ways.

In What Ways Does AI Improve Feedback Quality Compared to Traditional Grading?

Feedback quality often suffers not from lack of care, but from lack of time. AI shifts that balance. AI-generated feedback is consistent and criteria-driven. Every student is measured against the same standards, every time. There is no late-night fatigue. No uneven attention.

Traditional grading, by contrast, is rich but variable. Teachers bring insight and context, yet workload and time pressure inevitably affect depth and consistency.

AI reduces fatigue-related grading errors by handling the mechanical aspects reliably. It also provides detailed, line-level feedback across entire classes, not just a few highlighted issues.

The result is feedback that feels more actionable. Students receive specific guidance rather than broad summaries. They know what to revise and where to focus next.

The contrast is clearest when viewed side by side:

- Consistency vs human variability, where AI applies rules uniformly

- Speed vs limited availability, where AI responds instantly

- Scale vs manual constraints, where AI supports entire cohorts

With quality stabilized, personalization becomes the next frontier.

How Can AI Personalize Assessment and Feedback for Individual Students?

Personalization begins with attention to detail. AI assesses each student’s strengths and weaknesses by analyzing responses over time, not just in isolation. Patterns emerge. Gaps become visible. Progress becomes measurable.

Personalized learning paths adapt to learning styles and pace, allowing students to move forward when ready and slow down when needed. Adaptive testing adjusts difficulty in real time, responding to performance rather than locking everyone into the same sequence. Feedback is tailored to individual student needs, not averaged across a class.

This approach changes outcomes. Personalized feedback improves engagement, retention, and achievement because it feels relevant. Students are no longer correcting abstract mistakes. They are responding to guidance that reflects their actual work.

Supporting mechanisms often include:

- Adaptive learning technologies that adjust content dynamically

- Personalized student support driven by analytics

- Targeted feedback aligned to individual learning patterns
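One common way to track strengths and weaknesses over time is a per-skill running estimate that weights recent answers more heavily than old ones. The sketch below assumes an exponential moving average with a hypothetical smoothing factor `alpha = 0.3` and a mastery threshold of 0.5; both values and the function names are illustrative, not taken from any particular product.

```python
from typing import Dict, List


def update_mastery(mastery: Dict[str, float], skill: str, correct: bool,
                   alpha: float = 0.3) -> None:
    """Exponential moving average of correctness for one skill.

    Each new answer moves the estimate a fraction `alpha` toward 1.0 (correct)
    or 0.0 (incorrect), so recent work counts more than old work.
    """
    prior = mastery.get(skill, 0.5)  # unseen skills start at a neutral 0.5
    target = 1.0 if correct else 0.0
    mastery[skill] = (1 - alpha) * prior + alpha * target


def weakest_skills(mastery: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Skills below the threshold, weakest first: candidates for targeted feedback."""
    below = [s for s, m in mastery.items() if m < threshold]
    return sorted(below, key=lambda s: mastery[s])


mastery: Dict[str, float] = {}
for skill, correct in [("fractions", False), ("grammar", True), ("fractions", False)]:
    update_mastery(mastery, skill, correct)
print(weakest_skills(mastery))  # ['fractions']
```

A larger `alpha` reacts faster to recent answers but is noisier; a smaller one is steadier but slower to notice a new gap.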

Once personalization is in place, assessment itself begins to evolve.

Which Assessment Models Become Possible With AI That Were Hard Before?

AI expands what assessment can look like. Computerized Adaptive Testing adjusts questions dynamically, responding to student performance instead of forcing a fixed path. Dynamic mastery checks replace one-time exams, offering multiple chances to demonstrate understanding.
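Computerized Adaptive Testing is usually built on item response theory. As a simplified sketch, the code below assumes the one-parameter (Rasch) model, picks the unused question whose difficulty is closest to the current ability estimate, and nudges that estimate after each answer with a gradient step. The item bank, the step size of 0.5, and the simulated student are assumptions for illustration; production CAT engines typically use maximum-likelihood or Bayesian ability estimation and stop once measurement error is small enough.

```python
import math
from typing import Callable, List, Set, Tuple


def p_correct(theta: float, b: float) -> float:
    """Rasch (1PL) model: chance that ability theta answers an item of difficulty b correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def next_item(theta: float, bank: List[float], used: Set[int]) -> int:
    """Pick the unused item whose difficulty is closest to the current ability estimate.

    Under the Rasch model an item is most informative when b is close to theta.
    """
    candidates = [i for i in range(len(bank)) if i not in used]
    return min(candidates, key=lambda i: abs(bank[i] - theta))


def run_cat(answer: Callable[[float], bool], bank: List[float],
            n_items: int = 5, step: float = 0.5) -> Tuple[float, List[int]]:
    """Administer up to n_items adaptively; return the ability estimate and items asked."""
    theta = 0.0  # start from an average ability estimate
    asked: List[int] = []
    for _ in range(min(n_items, len(bank))):
        i = next_item(theta, bank, set(asked))
        asked.append(i)
        x = 1.0 if answer(bank[i]) else 0.0
        # Gradient step on the Rasch log-likelihood, whose derivative in theta is (x - p).
        theta += step * (x - p_correct(theta, bank[i]))
    return theta, asked


# Simulated student: answers correctly whenever the item difficulty is at most 1.0.
bank = [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
theta, asked = run_cat(lambda b: b <= 1.0, bank)
print(round(theta, 2), asked)
```

Each student sees a different sequence of questions, yet every estimate lands on the same difficulty scale.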

Continuous assessment models reduce test anxiety by spreading evaluation across learning activities. AI supports online assessments and virtual tutors that guide students through problem-solving rather than simply scoring outcomes. Real-time monitoring of student progress replaces periodic snapshots with ongoing insight.

Perhaps most striking is the evaluation of complex skills. AI now assesses clinical reasoning in medicine, coding logic in computer science, and other applied competencies that were once difficult to measure at scale. Assessment software becomes less about ranking and more about understanding how students think and apply knowledge.

As assessment models evolve, engagement shifts as well.

How Does AI Improve Student Engagement and Motivation During Assessment?

Engagement grows when feedback feels immediate and relevant. Instant feedback increases student engagement by keeping effort and response closely connected. You act, you see the result, and you adjust. That loop encourages persistence.

Interactive assessments promote active learning rather than passive completion. AI prompts reflection and metacognition by asking students to reconsider choices or explore alternatives.

Progress tracking becomes clearer and more frequent, helping students see growth instead of guessing at it. AI-driven assessments also influence satisfaction. When students understand where they stand and what to do next, motivation tends to rise.

Common engagement signals include:

- Feedback response patterns, showing how students revise and persist

- Motivation and persistence, reflected in continued effort

- Growth indicators, visible through repeated improvement
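The three signals above can be computed from something as simple as the sequence of scores a student earns across revisions of one task. This sketch assumes scores are recorded in submission order; the `engagement_signals` function and its metric names are hypothetical.

```python
from typing import Dict, List


def engagement_signals(attempt_scores: List[float]) -> Dict[str, float]:
    """Summarize one student's successive attempts at a single task.

    attempt_scores: scores in submission order (first draft, then each revision).
    """
    revisions = max(len(attempt_scores) - 1, 0)
    improved = sum(1 for before, after in zip(attempt_scores, attempt_scores[1:])
                   if after > before)
    return {
        # Feedback response and persistence: how many times the student revised.
        "revisions": revisions,
        # Growth: share of revisions that raised the score.
        "improvement_rate": improved / revisions if revisions else 0.0,
        # Growth over the whole task: last score minus first score.
        "net_gain": attempt_scores[-1] - attempt_scores[0] if attempt_scores else 0.0,
    }


print(engagement_signals([55, 62, 60, 74]))
```

Here the student revised three times, improved on two of them, and gained 19 points overall, despite one step backward along the way.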

With engagement strengthened, the same data can also help educators spot students who are starting to struggle.

How Can AI Help Educators Identify At-Risk Students Earlier?

Trouble rarely announces itself all at once. It shows up quietly. A missed assignment here. A pattern of small errors there. AI helps surface those signals before they harden into outcomes.

Using data analytics, AI systems analyze engagement, attendance, error frequency, and completion rates over time. Predictive analytics can identify at-risk students weeks in advance, long before a failing grade appears.

This is not guesswork. It’s pattern recognition applied at scale. Dashboards bring these insights together, highlighting common misconceptions and emerging struggles across a class or cohort.

That visibility changes how educators respond. Instead of reacting late, they can intervene early. Support becomes targeted rather than generic. Instructional strategies shift proactively, not defensively.

Common indicators AI tracks include:

- Engagement drops, such as reduced interaction or delayed submissions

- Repeated errors, pointing to unresolved misconceptions

- Completion trends, signaling loss of momentum
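The indicators above can be turned into simple early-warning flags. The sketch below is rule-based rather than a trained predictive model, and every field name and threshold (a 50% drop in activity, three repeated errors, under 60% completion across the last two weeks) is a hypothetical choice that an institution would need to calibrate against its own data.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WeeklyActivity:
    logins: int           # interactions with course materials that week
    submitted: int        # assignments turned in that week
    assigned: int         # assignments due that week
    repeated_errors: int  # errors of a type the student already received feedback on


def risk_flags(history: List[WeeklyActivity], drop_ratio: float = 0.5,
               error_limit: int = 3, completion_floor: float = 0.6) -> List[str]:
    """Return early-warning flags comparing the most recent week with earlier weeks."""
    if len(history) < 2:
        return []  # not enough history to compare against
    flags = []
    latest = history[-1]

    # Engagement drop: latest week well below the student's own earlier average.
    baseline = sum(w.logins for w in history[:-1]) / (len(history) - 1)
    if baseline > 0 and latest.logins < drop_ratio * baseline:
        flags.append("engagement drop")

    # Repeated errors: the same mistakes keep coming back despite feedback.
    if latest.repeated_errors >= error_limit:
        flags.append("repeated errors")

    # Completion trend: share of due work submitted over the last two weeks.
    recent = history[-2:]
    due = sum(w.assigned for w in recent)
    done = sum(w.submitted for w in recent)
    if due > 0 and done / due < completion_floor:
        flags.append("completion trend")
    return flags


history = [WeeklyActivity(10, 3, 3, 0), WeeklyActivity(9, 3, 3, 1), WeeklyActivity(3, 0, 3, 4)]
print(risk_flags(history))  # ['engagement drop', 'repeated errors', 'completion trend']
```

Comparing each student against their own baseline, rather than a class average, keeps naturally quiet students from being flagged just for being quiet.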

Early intervention improves student achievement because it preserves opportunity. Once educators can see risk clearly, they can act while there is still time to help.

What Administrative Burdens Does AI Remove From Assessment Workflows?

Assessment has always carried hidden labor. AI makes much of it visible, then quietly removes it. One of the clearest impacts is workload: by some estimates, AI-powered tools can reduce grading workloads by approximately 70%, a change that reshapes how educators spend their time.

Administrative tasks such as grading, data collection, and basic analysis are automated. That does not eliminate oversight, but it removes repetition. Teachers regain time for instruction, mentoring, and direct student interaction. The parts of teaching that require presence rather than processing.

AI also supports curriculum development and lesson planning by organizing assessment data into usable patterns. Instead of sorting spreadsheets, educators focus on teaching strategies informed by real evidence. The workday shifts. Less time managing tasks. More time guiding learning.

Efficiency here is not about speed for its own sake. It is about freeing educators to do the work that only humans can do well.

How Does AI Support Accessibility and Inclusion in Student Assessment?

Access shapes outcomes. AI helps widen that access in practical ways. Some AI graders now support more than 80 languages, allowing students to engage with assessments in their preferred language.

Speech recognition tools support multilingual learners and those developing language skills, reducing barriers that have little to do with understanding the subject itself.

Accessibility extends further. AI enhances assessment for students with disabilities by adapting formats, pacing, and delivery. Material that was once inaccessible becomes usable. That matters more than it sounds.

Key contributions include:

- Speech recognition, supporting language learning and alternative input

- Multilingual assessment, expanding equal access

- Adaptive formats, improving usability for diverse needs

Inclusive learning environments improve equity and outcomes because assessment reflects ability rather than circumstance. AI does not create inclusion on its own, but it can remove obstacles that have long been treated as unavoidable.

What Ethical Risks Must Be Managed When Using AI for Assessment and Feedback?

Power brings responsibility. AI assessment systems depend on data, and data demands care. Data privacy and security require encryption, clear policies, and limits on use. Without them, trust erodes quickly.

Algorithmic bias presents another risk. If training data is narrow or skewed, AI can distort educational outcomes rather than improve them. Transparent AI policies help address this by making system behavior visible. Regular bias audits reduce inequality risks, but only when they are treated as ongoing work, not a checkbox.
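A bias audit can start with a simple question: does the AI score some groups of students systematically higher or lower than instructors do on the same work? The sketch below assumes paired AI and instructor scores labeled with a group (placeholders `"A"` and `"B"` here) and a hypothetical tolerance of half a point. It is a first-pass check, not a full fairness audit: instructor scores carry their own biases, and real audits need larger samples and proper statistical tests.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def audit_score_gaps(records: Iterable[Tuple[str, float, float]],
                     tolerance: float = 0.5) -> Tuple[Dict[str, float], List[str]]:
    """Compare AI scores with instructor scores, group by group.

    records: (group, ai_score, human_score) for the same piece of work.
    Returns the mean (ai - human) gap per group and the groups whose gap exceeds tolerance.
    """
    diffs: Dict[str, List[float]] = defaultdict(list)
    for group, ai_score, human_score in records:
        diffs[group].append(ai_score - human_score)
    gaps = {group: mean(d) for group, d in diffs.items()}
    flagged = sorted(g for g, gap in gaps.items() if abs(gap) > tolerance)
    return gaps, flagged


records = [("A", 8, 8), ("A", 7, 7.5), ("B", 6, 7.5), ("B", 5, 6)]
gaps, flagged = audit_score_gaps(records)
print(flagged)  # ['B']: the AI scores group B about 1.25 points below instructors
```

Running a check like this on every grading cycle, rather than once at launch, is what turns an audit into the ongoing work described above.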

There is also a human concern. Overreliance on AI may reduce teacher-student interaction if systems replace conversation instead of supporting it. Academic misconduct risks, including misuse of AI tools and plagiarism, require monitoring as well.

Ethical use is not about slowing innovation. It is about setting guardrails so progress does not outrun judgment.

How Can Institutions Implement AI Assessment Tools Responsibly?

Responsible implementation begins before the tool is turned on. Structured training programs are essential so educators understand not just how to use AI, but how to question it. Clear governance and timelines support adoption by defining roles, oversight, and accountability from the start.

Cost matters too. High implementation costs must be evaluated honestly, especially for institutions with limited resources. Professional development builds AI literacy, helping educators interpret results rather than accept them blindly.

Practical foundations include:

- Defined governance models, clarifying responsibility

- Training and support, ensuring confident use

- Ongoing evaluation, adjusting systems as needs change

When institutions implement AI assessment tools responsibly, learning outcomes improve because technology aligns with pedagogy. The goal is not automation. It is alignment.

How Can PowerGrader Enable Scalable, High-Quality Assessment and Feedback?

Scale is where assessment usually breaks. Good intentions collapse under volume. PowerGrader is designed to prevent that collapse by keeping feedback fast, consistent, and human-led.

PowerGrader provides instructor-controlled AI-generated feedback, not automated judgment. Educators define assessment criteria. AI applies them consistently. That separation matters.

It reduces workload without loosening standards. Real-time written corrective feedback appears during the revision process, allowing students to respond while learning is still active.

Pattern detection across cohorts adds another layer of value. Instead of discovering gaps after exams, instructors see trends as they form. Common misconceptions surface early. Instruction adapts sooner. And because PowerGrader follows a human-in-the-loop governance model, educators can review, adjust, or override AI feedback at any point.
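A human-in-the-loop workflow can be modeled as a review queue in which AI suggestions stay as drafts until an instructor approves or rewrites them. The sketch below is a generic illustration of that pattern, not PowerGrader's actual API; `FeedbackItem`, `Status`, and `released` are hypothetical names.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class Status(Enum):
    DRAFT = "draft"            # AI suggestion, not yet reviewed
    APPROVED = "approved"      # instructor accepted the AI text as-is
    OVERRIDDEN = "overridden"  # instructor replaced the AI text


@dataclass
class FeedbackItem:
    student: str
    ai_text: str
    status: Status = Status.DRAFT
    final_text: Optional[str] = None

    def approve(self) -> None:
        self.status = Status.APPROVED
        self.final_text = self.ai_text

    def override(self, instructor_text: str) -> None:
        self.status = Status.OVERRIDDEN
        self.final_text = instructor_text


def released(items: List[FeedbackItem]) -> Dict[str, str]:
    """Only feedback an instructor has approved or rewritten reaches students."""
    return {item.student: item.final_text for item in items
            if item.status is not Status.DRAFT and item.final_text is not None}


queue = [FeedbackItem("ana", "Cite a source for your second claim."),
         FeedbackItem("ben", "Your thesis is unclear."),
         FeedbackItem("cai", "Check the units in step 3.")]
queue[0].approve()
queue[1].override("Strong draft; sharpen the thesis in the first paragraph.")
print(released(queue))  # cai's draft is still held back for review
```

Keeping the AI text and the final text as separate fields also leaves a record of where instructors disagreed with the AI, which is useful input for the ongoing evaluation described earlier.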

The result is not faster grading alone. It is maintained rigor at scale, where feedback quality holds steady even as class sizes grow. That balance is what makes AI usable in real educational settings.

What Does the Future of AI-Driven Assessment and Feedback Look Like?

The direction is already visible. AI continues to improve speed, accuracy, and personalization, tightening the feedback loop that drives learning forward. Assessment moves away from isolated events and toward continuous, adaptive models that reflect how students actually learn.

What does not change is the role of educators. Teachers remain central decision-makers, setting standards, interpreting context, and guiding growth. Responsible AI strengthens education systems when it supports judgment instead of replacing it.

Making education more responsive does not require abandoning human insight. It requires better tools, clearer boundaries, and thoughtful use. When implemented responsibly, AI-driven assessment improves student learning outcomes not by doing more teaching, but by making teaching more effective.

Frequently Asked Questions (FAQs)

1. How can AI improve student assessment and feedback?

AI improves assessment by providing immediate, consistent, and personalized feedback, helping students understand strengths and weaknesses while allowing educators to act on insights faster.

2. Is AI-based assessment more accurate than traditional grading?

AI can improve accuracy by applying assessment criteria consistently and reducing the effects of human fatigue, though final evaluation and contextual judgment remain essential human responsibilities.

3. Can AI-generated feedback replace teachers?

No. AI supports assessment workflows and feedback delivery, but educators retain authority over evaluation, instructional decisions, and meaningful student interaction.

4. How does AI help students learn more effectively?

AI provides real-time feedback, adaptive assessments, and personalized learning paths that help students correct mistakes early and stay engaged throughout the learning process.

5. What are the main risks of using AI in assessment?

Risks include data privacy concerns, algorithmic bias, overreliance on automation, and reduced human interaction if systems are poorly governed.

6. How does AI support large or diverse classrooms?

AI scales feedback across large classes, supports multilingual learners, and improves accessibility, helping deliver more equitable assessment experiences.

7. What makes PowerGrader different from generic AI grading tools?

PowerGrader keeps instructors in control, applies criteria consistently, detects learning patterns across cohorts, and reduces workload while preserving academic rigor.